Cheng-Kuang (Brian) Wu

Senior Research Scientist, Appier AI Research

Short Bio

I am a full-time research scientist on the Appier AI Research team, where I work with advisors Prof. Yun-Nung Chen and Prof. Hung-yi Lee. Previously, I earned my Master’s degree in Computer Science from National Taiwan University (NTU), where I was advised by Prof. Hsin-Hsi Chen, who leads the NLPLab. I am also a licensed medical doctor, having obtained my Doctor of Medicine (M.D.) degree from NTU.

Research Interests

My research is guided by a simple philosophy: all human beings deserve to flourish and realize their full potential, and artificial intelligence (AI) has the potential to help achieve this ideal. Therefore, I believe that AI research should not only be innovative, but also purposefully aligned with benefits to humanity. My research interests center on two tightly coupled directions:

- Capability: overcoming limitations of current AI systems (e.g., in continual learning, specifically loss of plasticity and catastrophic forgetting in deep learning) so that future AI systems can address the complex challenges humans will face; and

- Safety, reliability, and robustness: ensuring that increasingly powerful systems behave in aligned, trustworthy, and dependable ways across diverse scenarios.

I view advanced AI as a transformative technology with both tremendous upside and existential risk, which makes developing it well the most important technical problem of our time. Progress therefore requires advancing capability and safety in tandem, with careful preparation for rapidly increasing capabilities, because novel breakthroughs often introduce new risks. For example, progress in continual learning may open up new attack surfaces for injecting malicious content into AI systems.

In my past research, I have studied various topics related to language models (LMs), such as benchmarking continual learning, uncertainty awareness, and robustness. The full list of my publications is available on my Google Scholar profile.

Recent Favorite Works

To give a concrete sense of my research tastes, here are some of my favorite works and blogs:

- The Emerging Science of Machine Learning Benchmarks (Hardt, 2025)

- The Jailbreak Tax: How Useful are Your Jailbreak Outputs? (Nikolić et al., 2025)

- Training on the Test Task Confounds Evaluation and Emergence (Dominguez-Olmedo et al., 2024)

- Unfamiliar Finetuning Examples Control How Language Models Hallucinate (Kang et al., 2024)

- Physics of Language Models (Allen-Zhu et al., 2024)

My Favorite Quote

"Research is the search for reality. It is a wonderful search. It keeps us humble. Authentic humility is striving to see things how they are, rather than how we want them to be." (Kevin Gimpel, in his advice on being a happier researcher)

News

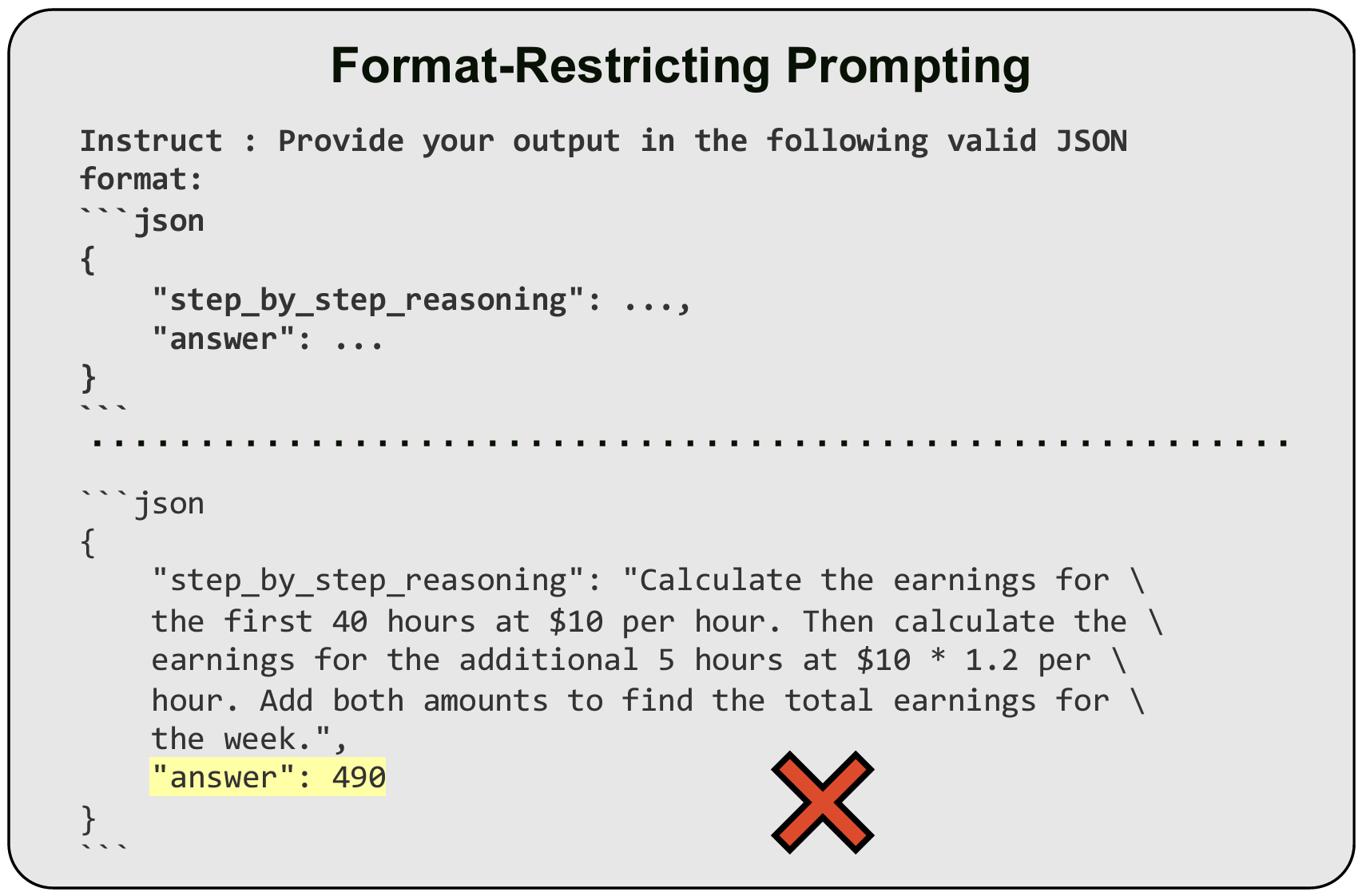

| Oct 01, 2024 | Our Let Me Speak Freely paper is accepted by EMNLP 2024 Industry Track. See you in Miami 🇺🇸! |

|---|---|

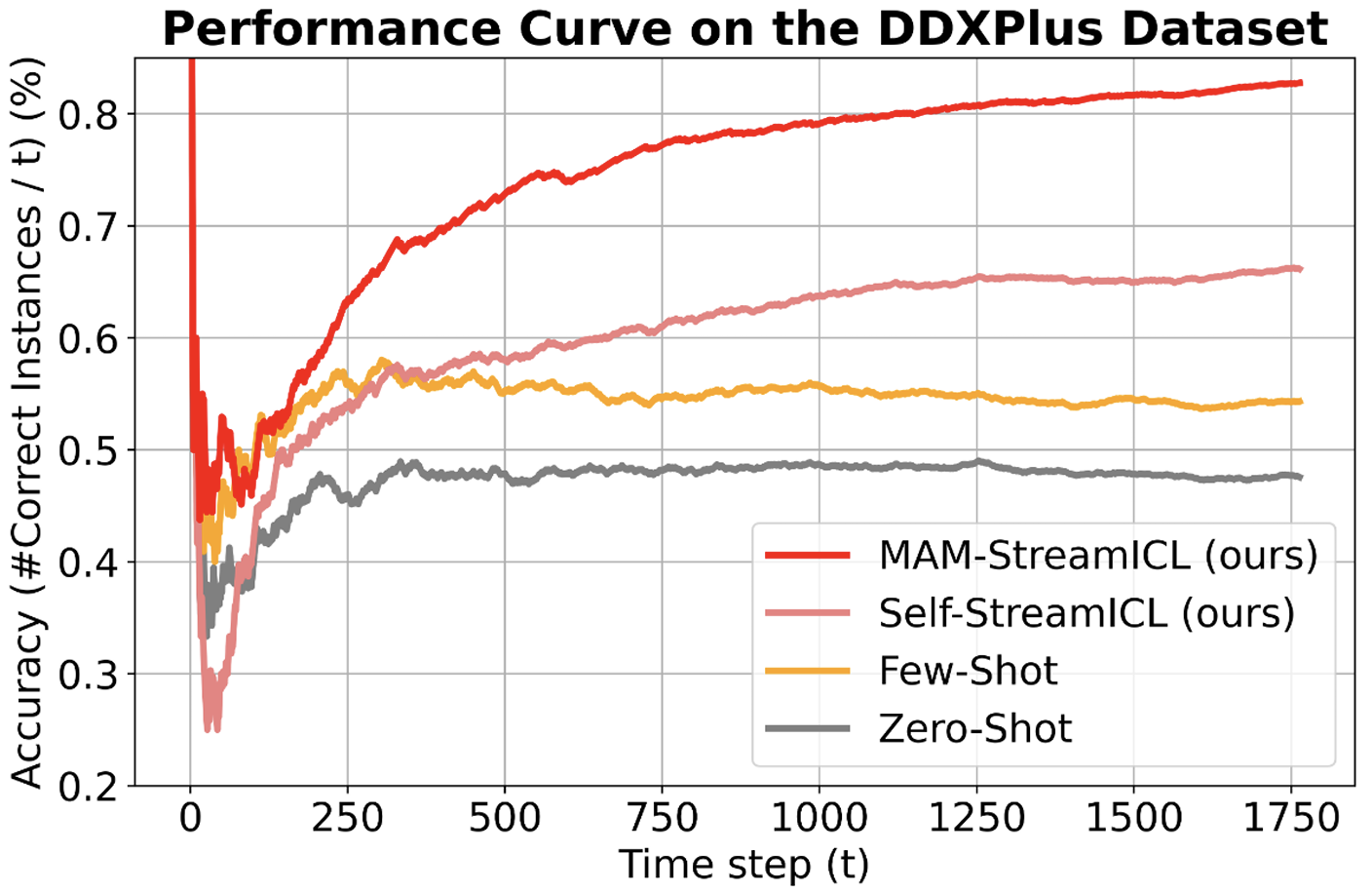

| Sep 26, 2024 | Our StreamBench paper is accepted by NeurIPS 2024 Datasets and Benchmarks Track. See you in Vancouver 🇨🇦! |

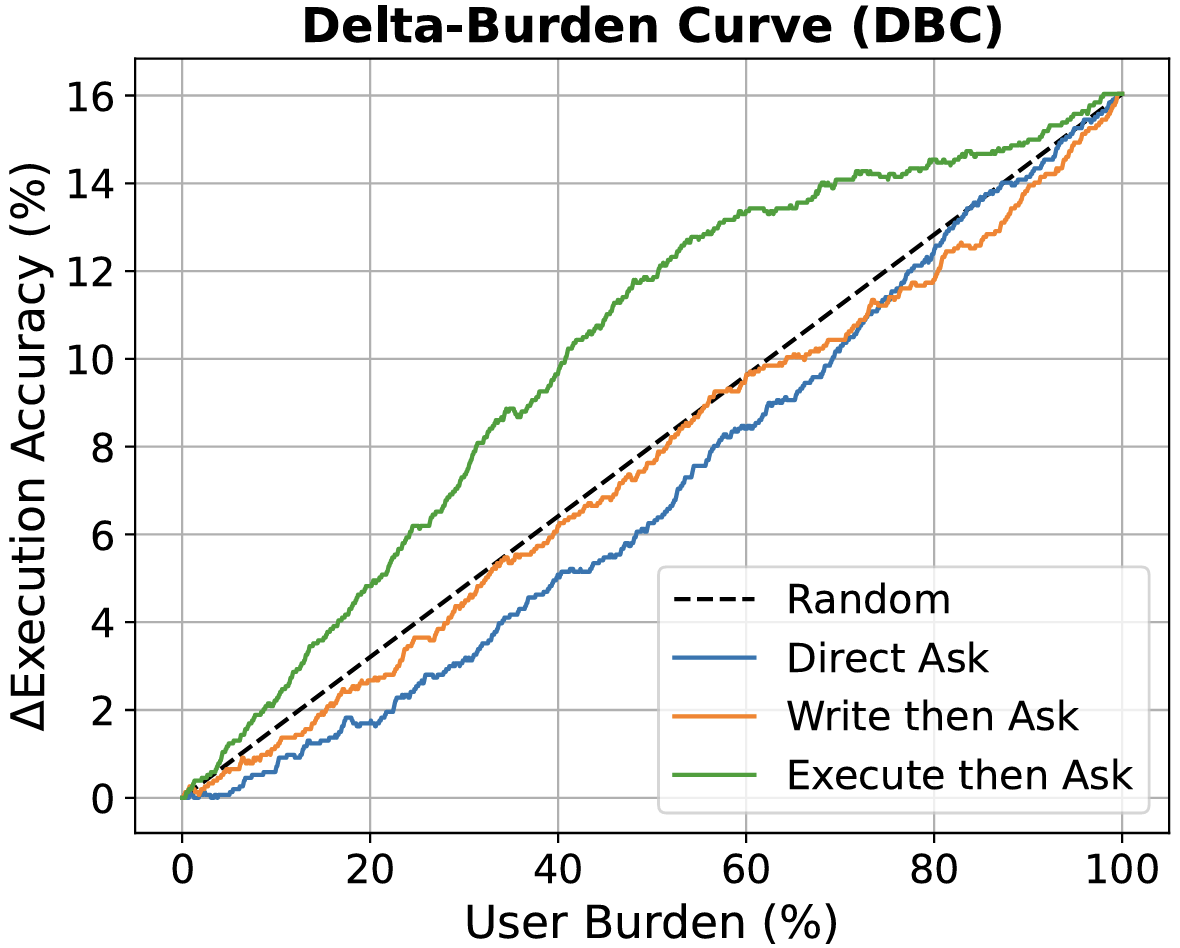

| Sep 20, 2024 | Our I Need Help paper is accepted by EMNLP 2024 Main Conference. See you in Miami 🇺🇸! |

Latest Posts

| Date | Post |
|---|---|
| Jan 04, 2025 | Test Github Workflow |
| Dec 22, 2024 | NeurIPS 2024 Reflections |
| May 01, 2024 | a post with tabs |